Table of Contents

Classification

MLP

Multilayer Perceptron; French: PMC (Perceptron Multi-Couches)

Gradient Backpropagation

French: Rétropropagation du Gradient

Stochastic

with inertia (momentum)
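The three entries above (gradient backpropagation, its stochastic variant, and inertia) can be sketched together. This is a minimal pure-Python sketch that assumes a hypothetical network with 2 inputs, 3 sigmoid hidden units, one sigmoid output and a squared-error loss. "Stochastic" means the weights are updated after each single example, and inertia is implemented as the usual momentum term (velocity `v`, coefficient `mu`). All names and hyperparameters are illustrative.

```python
import math
import random

N_IN, N_HID = 2, 3                       # hypothetical layer sizes
N_PARAMS = N_HID * N_IN + N_HID + N_HID + 1

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def unpack(p):
    """Split the flat parameter list into (W1 rows, b1, W2, b2)."""
    W1 = [p[j * N_IN:(j + 1) * N_IN] for j in range(N_HID)]
    k = N_HID * N_IN
    return W1, p[k:k + N_HID], p[k + N_HID:k + 2 * N_HID], p[k + 2 * N_HID]

def forward(p, x):
    W1, b1, W2, b2 = unpack(p)
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y

def loss(p, x, t):
    return 0.5 * (forward(p, x)[1] - t) ** 2

def backprop(p, x, t):
    """Gradient of the loss for one example, in the same order as unpack()."""
    W1, _, W2, _ = unpack(p)
    h, y = forward(p, x)
    d_out = (y - t) * y * (1 - y)                                     # output delta
    d_hid = [d_out * W2[j] * h[j] * (1 - h[j]) for j in range(N_HID)]  # deltas propagated back
    g = [d_hid[j] * xi for j in range(N_HID) for xi in x]             # dE/dW1
    g += d_hid                                                        # dE/db1
    g += [d_out * hj for hj in h]                                     # dE/dW2
    g.append(d_out)                                                   # dE/db2
    return g

def train(data, epochs=1000, lr=0.1, mu=0.9, seed=0):
    """Stochastic gradient descent with inertia (momentum coefficient mu)."""
    rng = random.Random(seed)
    p = [rng.uniform(-1, 1) for _ in range(N_PARAMS)]
    v = [0.0] * N_PARAMS
    data = list(data)                     # copy so the caller's list is not shuffled
    for _ in range(epochs):
        rng.shuffle(data)                 # visit examples in random order
        for x, t in data:
            g = backprop(p, x, t)         # gradient on a single example
            v = [mu * vi - lr * gi for vi, gi in zip(v, g)]  # inertia keeps part of the last step
            p = [pi + vi for pi, vi in zip(p, v)]
    return p
```

Comparing `backprop` with central finite differences of `loss` is a cheap way to validate hand-derived deltas before training.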

Simulated Annealing

French: Recuit Simulé
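Simulated annealing replaces the deterministic gradient step with random perturbations that are sometimes accepted even when they increase the error, with a probability that shrinks as a "temperature" is lowered. A minimal sketch, assuming Gaussian perturbations, the Metropolis acceptance rule and a geometric cooling schedule; the function name and all parameters are hypothetical. To train an MLP, `f` would be the training error as a function of the flattened weights.

```python
import math
import random

def simulated_annealing(f, x0, t0=1.0, alpha=0.995, steps=5000, step_size=0.5, seed=0):
    """Minimize f over a list of floats; returns the best point found and its value."""
    rng = random.Random(seed)
    x, fx = list(x0), f(x0)
    best, fbest = list(x), fx
    t = t0
    for _ in range(steps):
        cand = [xi + rng.gauss(0.0, step_size) for xi in x]   # random perturbation
        fc = f(cand)
        # Metropolis rule: always accept improvements, accept worse moves with prob exp(-delta/t)
        if fc <= fx or rng.random() < math.exp(-(fc - fx) / t):
            x, fx = cand, fc
            if fx < fbest:
                best, fbest = list(x), fx
        t *= alpha                                             # geometric cooling
    return best, fbest
```

Early on (high `t`) uphill moves are frequently accepted, which helps escape local minima; as `t` decreases the search behaves more and more like a pure descent.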

Newton

Objective

Converges in fewer iterations than gradient descent (quadratically near a minimum), at the cost of more computation per iteration

Quick Def

Second-order method: uses the Hessian (matrix of second derivatives) of the error, not only its gradient
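A Newton step replaces the gradient step `x - eta * g` with `x - H^-1 g`, where `g` is the gradient and `H` the Hessian. The sketch below assumes the caller supplies gradient and Hessian functions (hypothetical `grad`/`hess` callables) and solves `H s = g` by Gaussian elimination rather than inverting `H`. On a quadratic error it reaches the minimum in a single step, which illustrates why it needs fewer iterations than gradient descent.

```python
import math

def solve(A, b):
    """Solve A s = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]   # augmented copy; A is not modified
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    s = [0.0] * n
    for r in range(n - 1, -1, -1):                 # back substitution
        s[r] = (M[r][n] - sum(M[r][c] * s[c] for c in range(r + 1, n))) / M[r][r]
    return s

def newton(grad, hess, x0, iters=20, tol=1e-12):
    """Pure Newton iterations x <- x - H(x)^-1 grad(x) (no line search)."""
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        if max(abs(gi) for gi in g) < tol:
            break
        step = solve(hess(x), g)
        x = [xi - si for xi, si in zip(x, step)]
    return x
```

For example, with E(x) = e^x - 2x the iterates from x = 0 are 1, 0.7358, 0.6940, ... and converge to ln 2 ≈ 0.6931.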

RBFNN

Radial Basis Function Neural Networks

First method

Objective

You have to choose k (the number of centers, i.e. hidden neurons)

Quick Def

k-means to place the k centers, then gradient descent
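A minimal sketch of this first method for a scalar input, assuming Gaussian basis functions with a fixed, hand-picked width `sigma`, a bias term, and a mean-squared-error loss; all names are hypothetical. k-means places the k centers, then batch gradient descent learns only the output weights. Because the model is linear in those weights the loss is convex, and a small enough learning rate decreases it at every step.

```python
import math

def kmeans_1d(xs, k, iters=50):
    """Plain k-means on scalar inputs; assumes k >= 2 and len(xs) >= k."""
    s = sorted(xs)
    centers = [s[i * (len(s) - 1) // (k - 1)] for i in range(k)]  # deterministic init
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for x in xs:
            j = min(range(k), key=lambda c: abs(x - centers[c]))   # nearest center
            groups[j].append(x)
        # move each center to its cluster mean (keep it if the cluster is empty)
        centers = [sum(g) / len(g) if g else centers[j] for j, g in enumerate(groups)]
    return centers

def features(x, centers, sigma):
    """Gaussian hidden-layer activations plus a constant bias input."""
    return [math.exp(-(x - c) ** 2 / (2 * sigma ** 2)) for c in centers] + [1.0]

def fit_rbf(xs, ys, k=4, sigma=0.5, lr=0.1, iters=2000):
    centers = kmeans_1d(xs, k)                      # step 1: place the centers
    phi = [features(x, centers, sigma) for x in xs]
    w = [0.0] * (k + 1)
    n = len(xs)
    for _ in range(iters):                          # step 2: gradient descent on the MSE
        grad = [0.0] * (k + 1)
        for f, y in zip(phi, ys):
            err = sum(wi * fi for wi, fi in zip(w, f)) - y
            for i in range(k + 1):
                grad[i] += 2 * err * f[i] / n
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return centers, w

def predict_rbf(x, centers, w, sigma=0.5):
    return sum(wi * fi for wi, fi in zip(w, features(x, centers, sigma)))
```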

Second method

Quick Def

incremental addition of neurons then exact method

SVM

Support Vector Machine

Decision tree

French: arbre de décision

ID3

Quick Def

based on entropy: each node splits on the attribute with the highest information gain
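A minimal sketch of ID3 on categorical attributes, assuming examples are `(attributes_dict, label)` pairs (a hypothetical representation). The entropy of a set of labels is H = -sum_c p_c log2 p_c, and the information gain of an attribute is H minus the size-weighted entropy of the subsets it creates.

```python
import math
from collections import Counter

def entropy(labels):
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def info_gain(rows, attr):
    """Entropy of the labels minus the weighted entropy after splitting on attr."""
    n = len(rows)
    remainder = 0.0
    for v in {x[attr] for x, _ in rows}:
        sub = [y for x, y in rows if x[attr] == v]
        remainder += len(sub) / n * entropy(sub)
    return entropy([y for _, y in rows]) - remainder

def id3(rows, attrs):
    """Return a leaf label or a nested dict {attr: {value: subtree}}."""
    labels = [y for _, y in rows]
    if len(set(labels)) == 1:                        # pure node
        return labels[0]
    if not attrs:                                    # no attribute left: majority label
        return Counter(labels).most_common(1)[0][0]
    best = max(attrs, key=lambda a: info_gain(rows, a))
    rest = [a for a in attrs if a != best]
    tree = {best: {}}
    for v in sorted({x[best] for x, _ in rows}):
        subset = [(x, y) for x, y in rows if x[best] == v]
        tree[best][v] = id3(subset, rest)
    return tree
```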

k-nearest neighbors

French: k plus proches voisins
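A minimal sketch of k-nearest-neighbor classification, assuming Euclidean distance and an unweighted majority vote (`knn_predict` is a hypothetical name). There is no training phase beyond storing the labeled examples.

```python
import math
from collections import Counter

def knn_predict(examples, query, k=3):
    """Majority label among the k stored examples closest to query."""
    neighbors = sorted(examples, key=lambda item: math.dist(item[0], query))[:k]
    return Counter(label for _, label in neighbors).most_common(1)[0][0]
```

With two classes an odd k avoids ties in the vote.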

Boosting

[Freund,Schapire, 1995]

Quick Def

Combines many weak classifiers into a strong one.

Boosting by majority

AdaBoost

ADAptive BOOSTing, [Freund,Schapire, 1996]

The first and standard version is referred to as Discrete AdaBoost.

Quick Def

Greedy approach

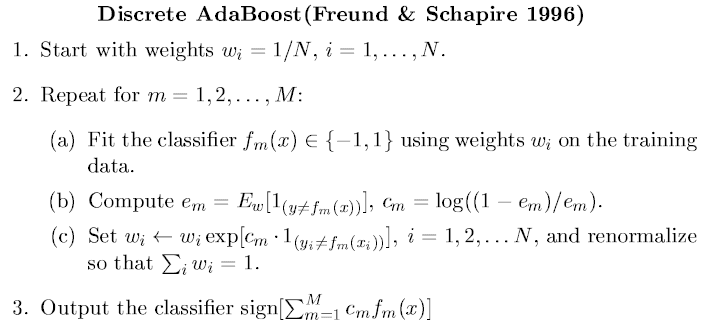
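The standard Discrete AdaBoost loop can be sketched as follows, assuming labels in {-1, +1} and one-dimensional decision stumps as weak classifiers (a common choice, but an assumption here; function names are hypothetical). At each round the stump with the lowest weighted error err is picked greedily, it receives the weight alpha = 0.5 ln((1 - err) / err), and the weights of misclassified examples are increased.

```python
import math

def best_stump(xs, ys, w):
    """Return (weighted error, threshold, polarity) of the best stump pol*sign(x >= thr)."""
    best = None
    for thr in sorted(set(xs)):
        for pol in (1, -1):
            preds = [pol if x >= thr else -pol for x in xs]
            err = sum(wi for wi, p, y in zip(w, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, thr, pol)
    return best

def adaboost(xs, ys, rounds=10):
    n = len(xs)
    w = [1.0 / n] * n                                # uniform initial weights
    model = []
    for _ in range(rounds):
        err, thr, pol = best_stump(xs, ys, w)        # greedy choice of the weak classifier
        err = max(err, 1e-10)                        # avoid division by zero for a perfect stump
        if err >= 0.5:                               # no better than chance: stop
            break
        alpha = 0.5 * math.log((1 - err) / err)
        preds = [pol if x >= thr else -pol for x in xs]
        # up-weight mistakes (y*p = -1), down-weight correct answers (y*p = +1)
        w = [wi * math.exp(-alpha * y * p) for wi, y, p in zip(w, ys, preds)]
        s = sum(w)
        w = [wi / s for wi in w]
        model.append((alpha, thr, pol))
    return model

def predict(model, x):
    score = sum(a * (pol if x >= thr else -pol) for a, thr, pol in model)
    return 1 if score >= 0 else -1
```

On xs = [1, 2, 3] with labels [1, -1, 1], every single stump misclassifies at least one point, but three boosting rounds classify all three correctly.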

Discrete AdaBoost

[Freund,Schapire, 1996]

References

Full Definition

Real AdaBoost

[Friedman,Hastie,Tibshirani, 1998]

References

Full Definition

LogitBoost

[Friedman,Hastie,Tibshirani, 1998]

References

Full Definition

Gentle AdaBoost

[Friedman,Hastie,Tibshirani, 1998]

References

Full Definition

Probabilistic AdaBoost

[Friedman,Hastie,Tibshirani, 1998]

References

FloatBoost

Objective

AdaBoost is a sequential forward search procedure that uses a greedy selection strategy to minimize a certain margin on the training set. A crucial heuristic assumption of such a procedure is monotonicity, i.e. that adding a new weak classifier to the current set does not decrease the value of the performance criterion. When this assumption is violated, the premise of AdaBoost's sequential procedure breaks down. Floating Search is a sequential feature selection procedure with backtracking, aimed at dealing with non-monotonic criterion functions for feature selection; FloatBoost brings this backtracking into AdaBoost.

Full Definition

AdaBoost.Reg

[Freund,Schapire, 1997]

Objective

An extension of AdaBoost to regression problems

References

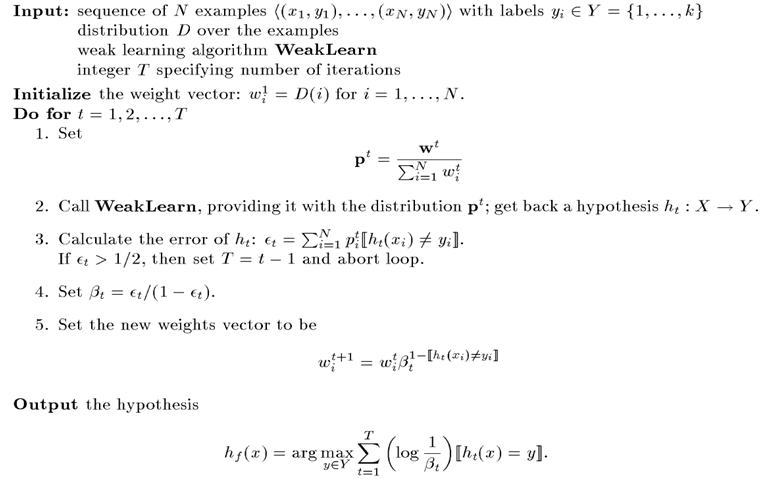

Multiclass AdaBoost.M1

[Freund,Schapire, 1997]

Objective

Basic extension of AdaBoost to multiclass problems. Each weak classifier must have an error rate below 1/2, which is much stronger than beating random guessing (error rate 1 - 1/k for k classes) and is often too difficult to obtain.

Quick Def

A weak classifier assigns each example a single label in {1,…,k}.

References

Full Definition

Multiclass AdaBoost.M2

[Freund,Schapire, 1997]

Objective

Tries to overcome the difficulty of AdaBoost.M1 by extending the communication between the boosting algorithm and the weak learner. The algorithm focuses not only on hard instances but also on classes that are hard to distinguish.

Quick Def

A weak classifier assigns each example a vector in [0,1]^k (one value per class), and the pseudo-loss also takes into account weights reflecting the weak classifier's performance on the different classes for the same example.

References

Full Definition

Multilabel AdaBoost.MR

[Schapire,Singer, 1998]

References

Full Definition

Multilabel AdaBoost.MH

[Schapire,Singer, 1998]

References

Full Definition

Multiclass AdaBoost.MO

[Schapire,Singer, 1998]

References

Multiclass AdaBoost.OC

[Schapire, 1997]

References

Multiclass AdaBoost.ECC

[Guruswami,Sahai, 1999]

References

AdaBoost.M1W

[Eibl,Pfeiffer, 2002]

GrPloss

[Eibl,Pfeiffer, 2003]

References

BoostMA

[Eibl,Pfeiffer, 2003]

References

SAMME

Stagewise Additive Modeling using a Multi-class Exponential loss function, [Zhu,Rosset,Zou, 2006]
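The 1/2 requirement of AdaBoost.M1 shows up directly in the classifier weight, which SAMME modifies. A minimal sketch comparing the two, assuming the usual forms: AdaBoost.M1 weights a classifier by log((1 - err) / err), which becomes negative as soon as err ≥ 1/2, while SAMME adds log(K - 1), so the weight stays positive whenever err < 1 - 1/K, i.e. whenever the classifier beats random guessing over K classes. Function names are hypothetical.

```python
import math

def m1_alpha(err):
    """AdaBoost.M1 classifier weight log(1/beta) with beta = err / (1 - err)."""
    return math.log((1 - err) / err)

def samme_alpha(err, n_classes):
    """SAMME classifier weight: the M1 weight plus log(K - 1)."""
    return math.log((1 - err) / err) + math.log(n_classes - 1)
```

With K = 2 the extra term is log(1) = 0 and SAMME reduces to the binary AdaBoost weight.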

References

GAMBLE

Gentle Adaptive Multiclass Boosting Learning, [Huang,Ertekin,Song, 2005]

References

UBoost

Quick Def

Uneven loss function + greedy

LPBoost

Objective

Not greedy, exact: the weights of all weak classifiers are optimized jointly by solving a linear program.

References

TotalBoost

TOTALly corrective BOOSTing, [Warmuth,Liao,Ratsch, 2006]

References

RotBoost

[Li,Abu-Mostafa,Pratap, 2003]

References

alphaBoost

[Li,Abu-Mostafa,Pratap, 2003]

References

MILBoost

Multiple Instance Learning BOOSTing, [Viola,Platt, 2005]

References

CGBoost

Conjugate Gradient BOOSTing, [Li,Abu-Mostafa,Pratap, 2003]

References

Bootstrap Aggregating (bagging)
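Bootstrap aggregating trains each model on a bootstrap sample (drawn with replacement, same size as the data) and combines the models by majority vote. A minimal sketch, assuming a hypothetical 1-nearest-neighbor base learner on scalar inputs; any `fit(sample) -> predict` function could be plugged in instead.

```python
import random
from collections import Counter

def bootstrap(data, rng):
    """Sample len(data) examples with replacement."""
    return [rng.choice(data) for _ in data]

def fit_1nn(sample):
    """Hypothetical base learner: 1-nearest neighbor on (x, label) pairs."""
    def predict(x):
        return min(sample, key=lambda p: abs(p[0] - x))[1]
    return predict

def bagging(data, fit, n_models=25, seed=0):
    rng = random.Random(seed)
    models = [fit(bootstrap(data, rng)) for _ in range(n_models)]
    def predict(x):
        votes = Counter(m(x) for m in models)        # one vote per bootstrap model
        return votes.most_common(1)[0][0]
    return predict
```

An odd number of models avoids ties between two classes.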

Cascades of detectors

Quick Def

A cascade of classifiers is a degenerate decision tree in which each stage is a classifier trained to detect almost all objects of interest while rejecting a certain fraction of the non-object patterns. For example, if each stage eliminates 50% of the non-object patterns and falsely eliminates 0.1% of the objects, then after 20 stages one can expect a false alarm rate of 0.5^20 ≈ 9.5 × 10^-7 and a hit rate of 0.999^20 ≈ 0.98. Since most non-object patterns are rejected after only a few stages, the cascade focuses attention on promising regions and dramatically increases speed.
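The arithmetic of this example can be checked directly: a pattern is accepted only if it passes every stage, so the overall rates are products of the per-stage rates (each taken among the patterns that reached that stage). Function names are hypothetical.

```python
def cascade_rates(stage_fa, stage_hit):
    """Overall (false alarm rate, hit rate) of a cascade given per-stage rates."""
    fa = hit = 1.0
    for f, h in zip(stage_fa, stage_hit):
        fa *= f
        hit *= h
    return fa, hit

def stages_needed(stage_fa, target_fa):
    """Smallest number of identical stages bringing the false alarm rate to target_fa or below."""
    n, fa = 0, 1.0
    while fa > target_fa:
        fa *= stage_fa
        n += 1
    return n
```

For instance, `stages_needed(0.5, 1e-6)` is 20, matching the 20-stage example.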

Trees of detectors

Regression

- MLP (Multilayer Perceptron)

- RBFNN (Radial Basis Function Neural Network)

- SVR (Support Vector Regressor)